Pancake Plaisir is an Alexa Skill that helps people find and prepare tasty and indulgent - but quick - pancake meals using their voice.

“Hey Alexa, Open Pancake Plaisir and find me some pancakes for breakfast!”

Overview

Amazon Alexa Developer Console

My Process

I used design thinking and best practices for VUI design to guide the design of Pancake Plaisir.

Understand

Brief

To understand the problem, I referred to the documentation provided with the brief, which clarified the problem, the user need and the key findings from the competitive research. This gave me a platform to dive into the problem space and interpret the brief.

Key Assumptions

I contextualised the problem, viewing it from the perspective that:

Most cultures have a sweet and savoury pancake

Most cultures have some form of sweet or savoury pancake. Pancake Plaisir could serve as a useful encyclopaedia to celebrate the diversity of pancakes.

Pancake ingredients are simple

The ingredients for pancakes are typically considered household staples and are often available in the pantry.

You can get very creative

Pancakes afford a lot of creativity, so people can customise their pancakes to suit their taste.

Visualise

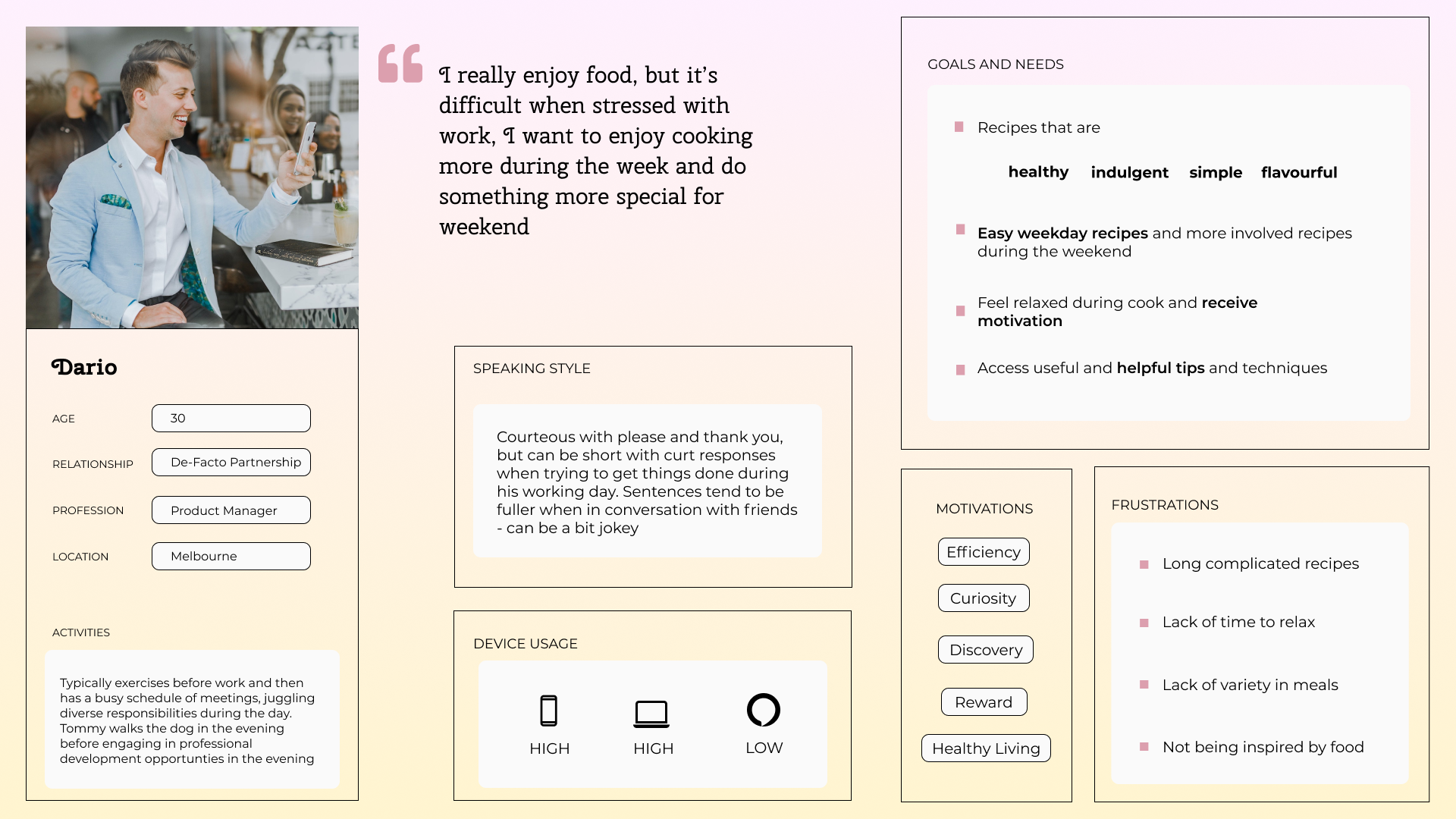

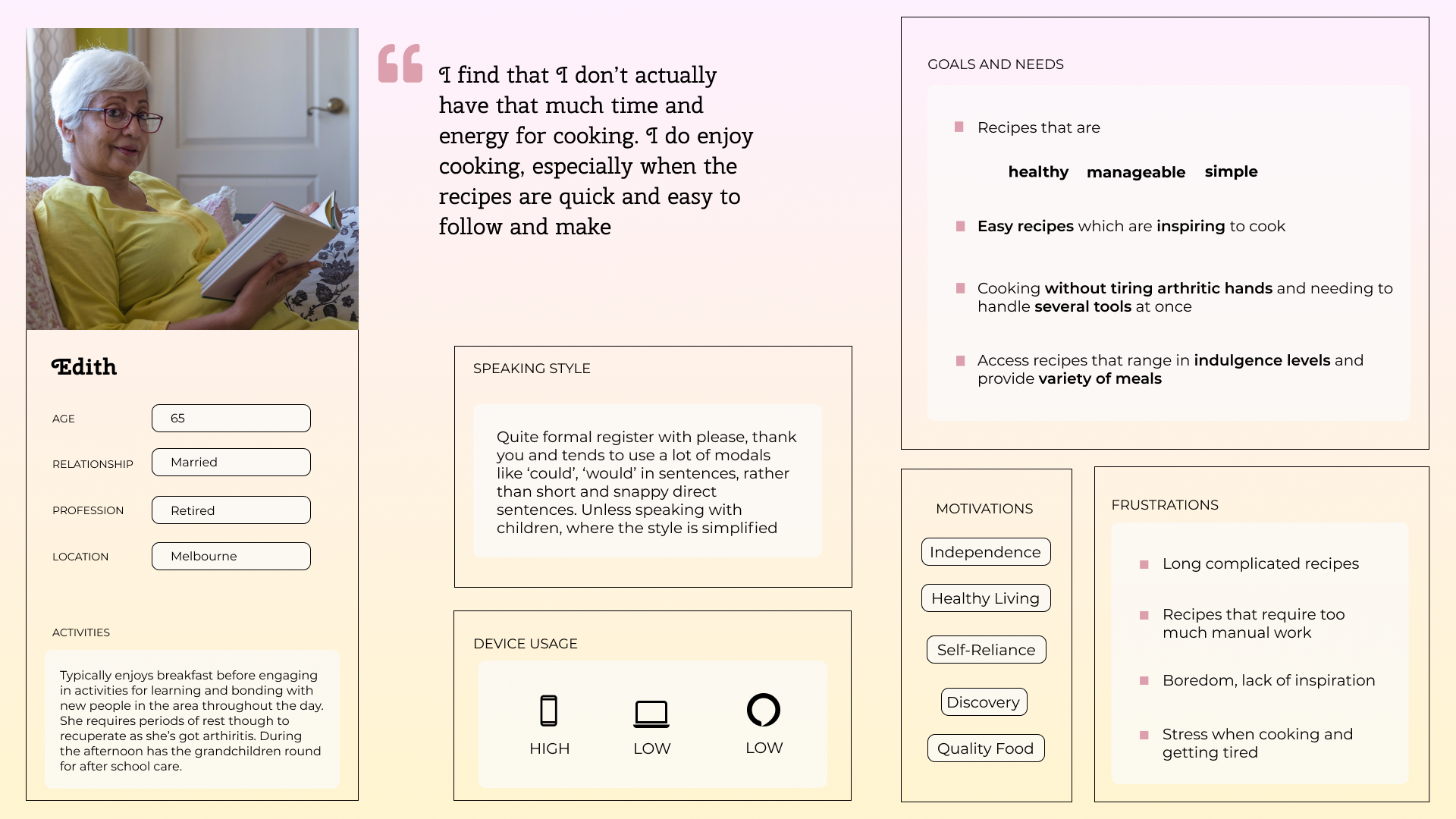

User Personas

I noted down my assumptions about potential users to create 2 proto-personas that would guide the design direction and make Pancake Plaisir more accessible.

I validated my assumptions by conducting 2 interviews and used the information to adapt the proto-personas.

Key Insights

I had direction for designing for a busy professional and for an older person with arthritis; both are interested in eating well and making quick, simple food.

For my second proto-persona, I identified that hearing the recipes took the strain off their eyes, and that the more tired their hands became, the fiddlier it would be to use a device like a tablet. Voice therefore offers an alternative path for following a recipe.

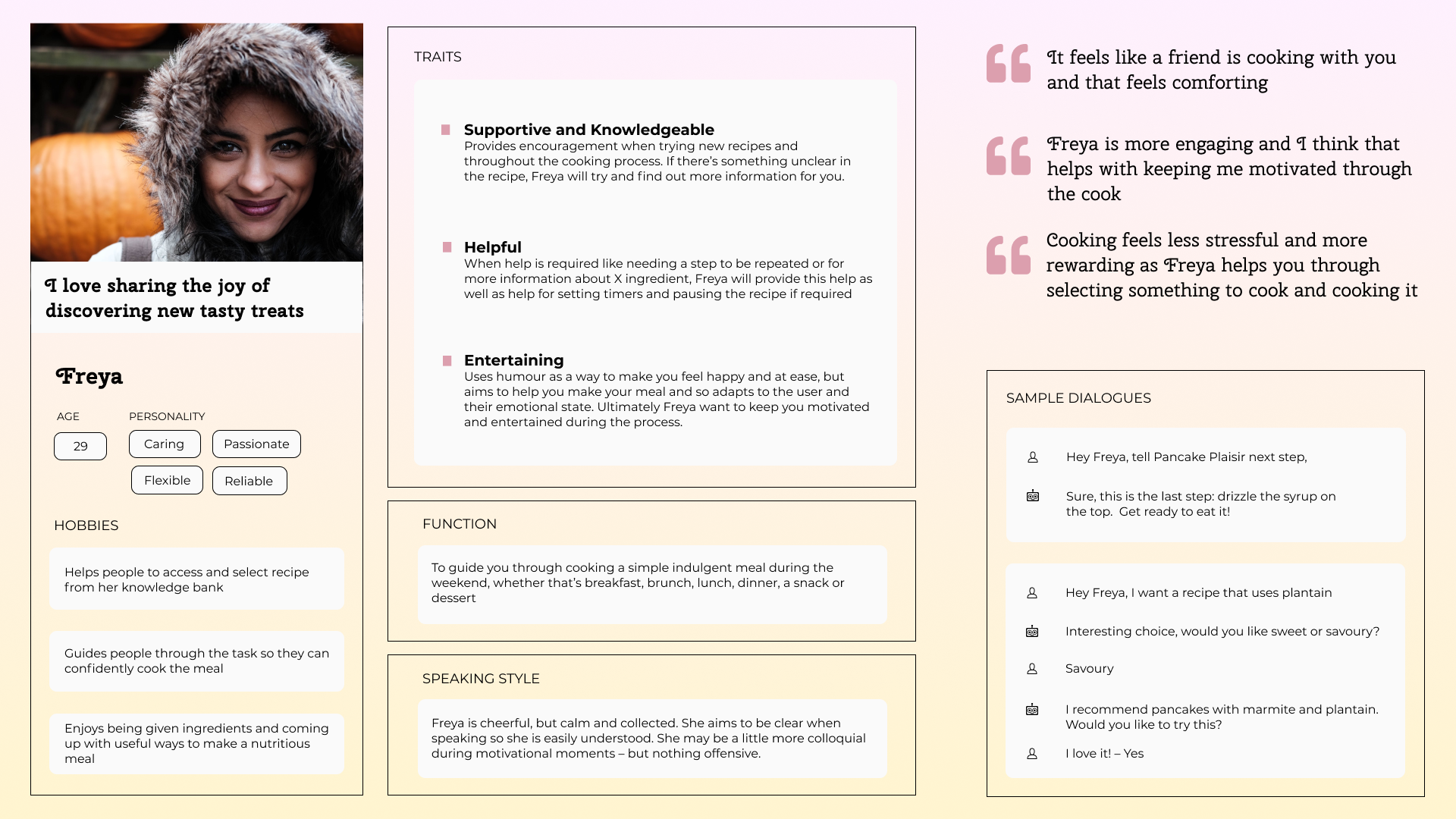

System Persona

Using my user personas, I moved onto creating the system persona - i.e. the persona of the skill. I needed to make sure that the system persona would suit my proto-personas, otherwise this could lead to a jarring experience.

Key Insights

The system persona would be like a knowledgeable friend or relative.

Humanising the persona is important as people generally ascribe human attributes to devices using voice. The system persona would be like a knowledgeable friend for my primary proto-persona and a helpful, caring nibling for my secondary proto-persona.

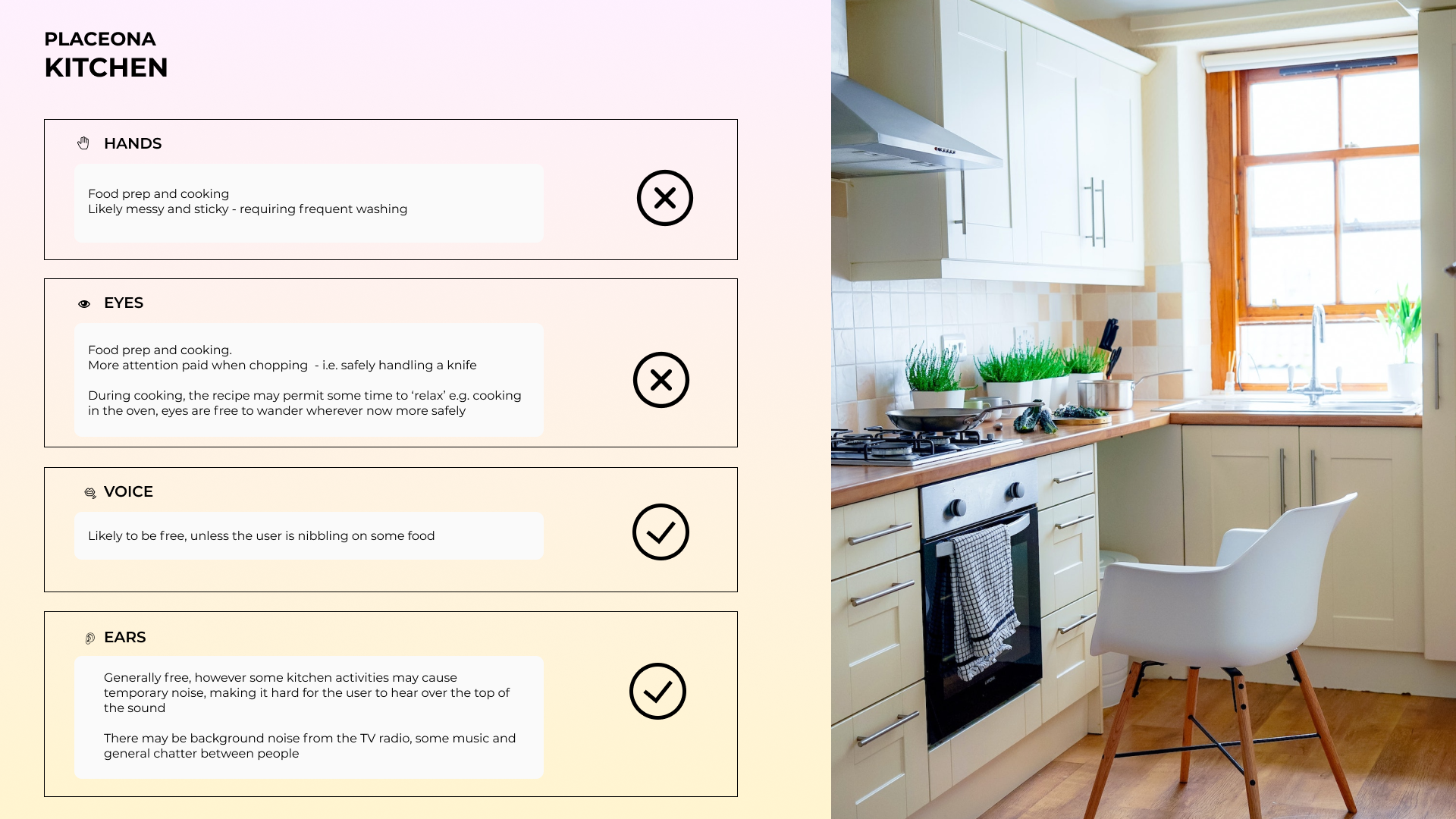

Placeona

To effectively design for voice we need to understand the context of the user, so I defined a placeona which describes the setting in which the voice experience will take place.

I needed to answer questions like:

Key Insights

The skill may be used in the kitchen or living room, tucked away, probably when their main attention is elsewhere.

I identified the common, and somewhat idealised, use cases:

- During cooking when hands and eyes are busy, but ears and mouth are free - in the kitchen

- When trying to find a recipe, the quick VUI search cuts through complexity even when hands and eyes are free - in the kitchen or in the living room

User Stories

Reviewing the personas, I identified their needs and matched them to functionality the skill should include.

Key Insights

From this, I established the following intents:

- Find recipe by meal type

- Find recipe by ingredient

- Receive random recipe

- Rate recipe

Writing and then grouping the user stories helped me to establish the intents (i.e. the functionality) and then prioritise them.

Recipe Database Creation

My skill would not be complete without recipes the user can access, so I created a database of recipes that could be grouped according to breakfast, lunch, dinner and snack. I also included a category for dessert as identified during my research.
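A minimal sketch of how a recipe database grouped by these categories could serve the find-by-meal-type, find-by-ingredient and random-recipe intents. It assumes recipes are held in memory; the names `Recipe` and `RecipeDatabase` and the sample entries are hypothetical illustrations, not the skill's actual data or code.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Categories from the case study; everything else here is hypothetical.
MEAL_TYPES = ("breakfast", "lunch", "dinner", "snack", "dessert")


@dataclass(frozen=True)
class Recipe:
    name: str
    meal_type: str
    ingredients: frozenset


class RecipeDatabase:
    """Hypothetical in-memory recipe store grouped by meal type."""

    def __init__(self, recipes: List[Recipe]):
        self._by_meal = {meal: [] for meal in MEAL_TYPES}
        for recipe in recipes:
            if recipe.meal_type not in self._by_meal:
                raise ValueError(f"unknown meal type: {recipe.meal_type}")
            self._by_meal[recipe.meal_type].append(recipe)

    def _all(self) -> List[Recipe]:
        return [r for group in self._by_meal.values() for r in group]

    def by_meal_type(self, meal_type: str) -> List[Recipe]:
        # Find recipe by meal type: case-insensitive, empty list if unknown.
        return list(self._by_meal.get(meal_type.lower(), []))

    def by_ingredient(self, ingredient: str) -> List[Recipe]:
        # Find recipe by ingredient: every recipe containing the ingredient.
        wanted = ingredient.lower()
        return [r for r in self._all() if wanted in r.ingredients]

    def random_recipe(self, rng: Optional[random.Random] = None) -> Optional[Recipe]:
        # Receive random recipe: None when the database is empty.
        recipes = self._all()
        if not recipes:
            return None
        return (rng or random).choice(recipes)


db = RecipeDatabase([
    Recipe("Buttermilk pancakes", "breakfast", frozenset({"flour", "buttermilk", "egg"})),
    Recipe("Spinach pancakes", "dinner", frozenset({"flour", "egg", "spinach"})),
])
print([r.name for r in db.by_ingredient("egg")])
```

Grouping by meal type up front keeps the most common request a single lookup, while the ingredient and random intents simply scan the whole collection, which is cheap for a small, hand-curated database.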

Key Insights

The recipes would require tweaking to better accommodate a voice experience.

Though outside the scope of this particular iteration, I knew I needed to adapt the recipes to reduce cognitive load on short-term memory to support users in cooking the recipe.

Execute

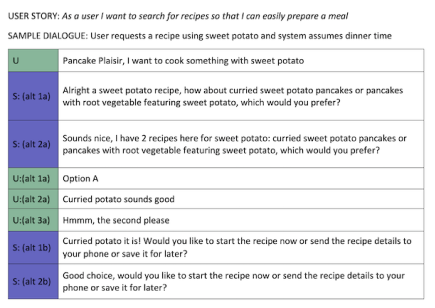

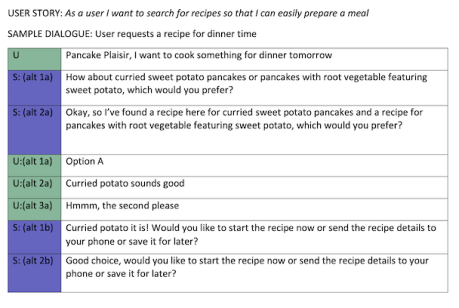

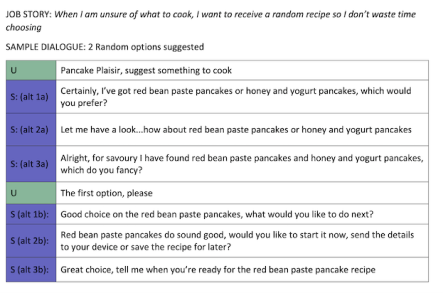

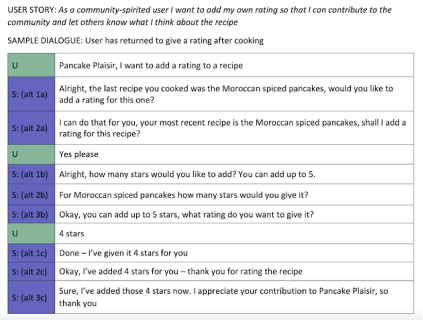

Sample Dialogues

From the prioritised user stories, I wrote sample dialogues to explore the interaction between the user and the system. These were my initial thoughts on what the conversation between the user and the system could be like.

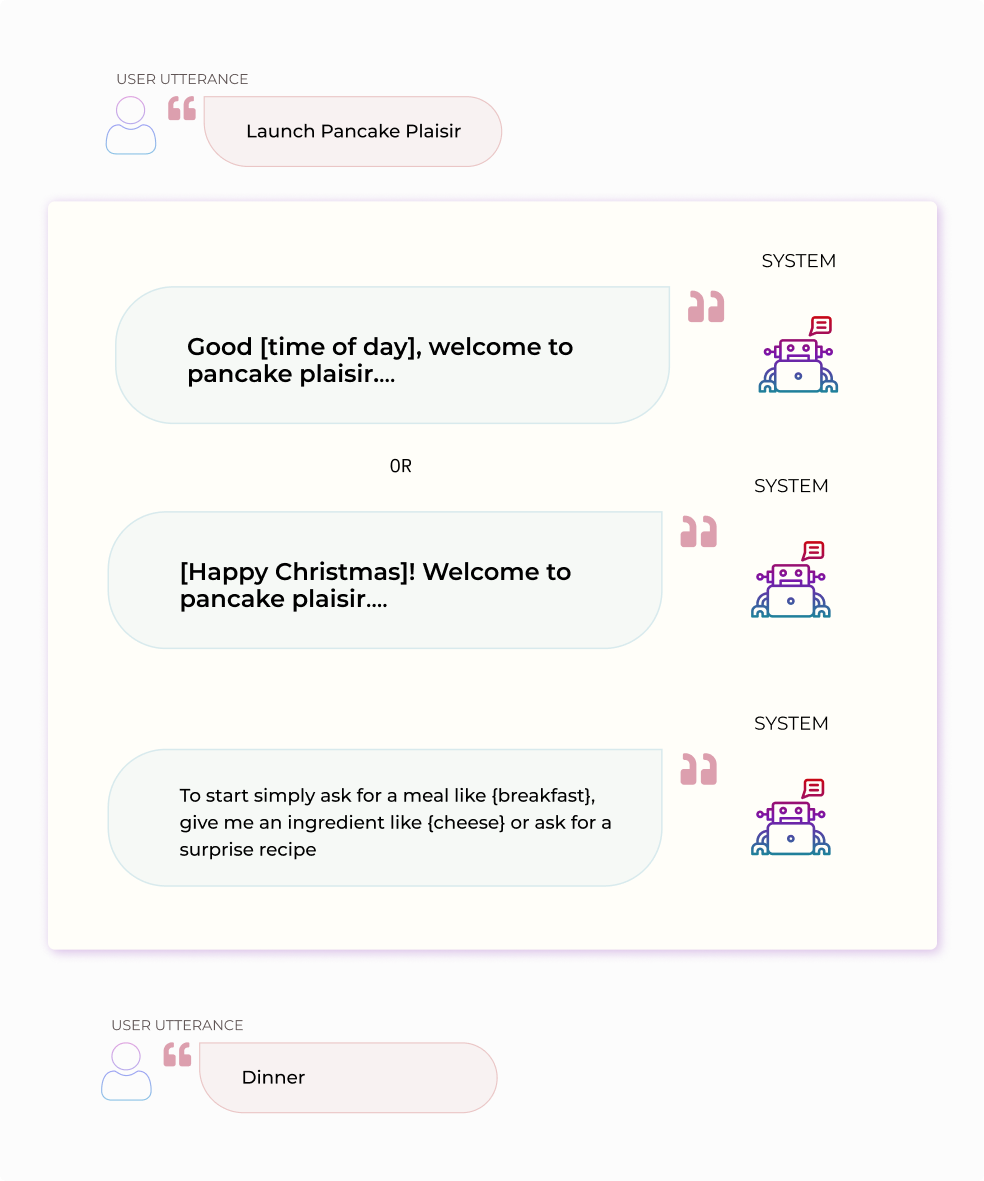

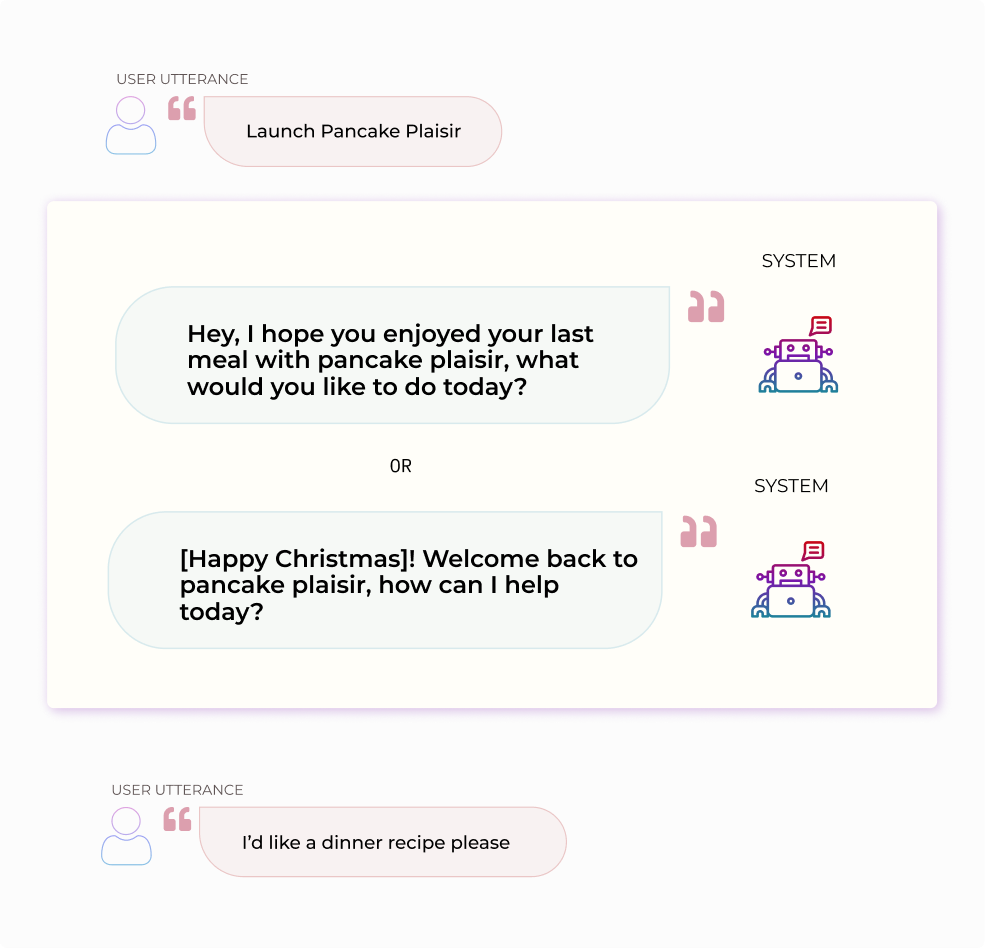

Opening Pancake Plaisir

Here I explored how the system greets the person. For example, is there a menu? Might the primary assumption be that the user wants a recipe? Is that a recipe for dinner or any recipe?

To help me, I also role-played the dialogues and read them aloud to hear how they sounded in speech.

Sample Dialogue

Key Insights

I identified that I needed to take the user’s context into account with the system greeting.

I was able to see that I'd need different greetings and menu options depending on whether the user was new or returning, and indeed on how frequently a returning user came back.
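A minimal sketch of choosing a greeting by user context. It assumes the skill stores the date of the user's previous visit somewhere persistent (the storage mechanism is left out) and uses days since that visit as a hypothetical proxy for frequency. The function name, the seven-day threshold and the greeting wording are all illustrative assumptions.

```python
from datetime import date
from typing import Optional

# Hypothetical threshold: returning users seen within this many days
# count as frequent and get the shortest greeting.
FREQUENT_WITHIN_DAYS = 7

FULL_MENU = ("I can find you a pancake recipe by meal type or by ingredient, "
             "or surprise you with a random one.")


def choose_greeting(last_visit: Optional[date], today: date) -> str:
    """Pick a greeting from how recently (if ever) the user last opened the skill."""
    if last_visit is None:
        # New user: introduce the skill and offer the full menu.
        return f"Welcome to Pancake Plaisir! {FULL_MENU} What would you like?"
    if (today - last_visit).days <= FREQUENT_WITHIN_DAYS:
        # Frequent returning user: skip the menu and get straight to it.
        return "Welcome back! What are we making today?"
    # Occasional returning user: a short reminder of the options.
    return f"Welcome back to Pancake Plaisir! {FULL_MENU} What would you like?"


print(choose_greeting(None, date.today()))
```

Tapering the menu this way keeps first-time users oriented without making regular users sit through options they already know.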

User Flows

Using the sample dialogues as inspiration, I began to work out the logic of the system. This meant working out how the system responds to the user and how it handles errors and repair paths. For example, if the user does not answer a question the system has asked, what does the system do next?

Finding Recipe by Meal Type

This enables people to ask for a specific recipe for {breakfast} or for {dinner}.

Key Insights

It reinforced the need to pursue happy paths that are simple, but not so restrictive that they cause errors later.

I initially identified a way for the system to infer the meal type the user wants from their time of day. I later decided that this might be overly restrictive: what if the user wants a recipe for tomorrow morning rather than this evening?
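A minimal sketch of a find-by-meal-type turn that asks for the meal instead of inferring it from the time of day, with a simple repair path when the user gives no usable answer. The function name, the two-prompt limit and the prompt wording are hypothetical; a real Alexa skill would receive the meal type as a slot value and track the prompt count in session state.

```python
from typing import Optional, Tuple

MEAL_TYPES = {"breakfast", "lunch", "dinner", "snack", "dessert"}
MAX_PROMPTS = 2  # hypothetical: give up after two unanswered prompts


def handle_find_by_meal_type(meal_type: Optional[str], prompts_so_far: int) -> Tuple[str, bool]:
    """Return (speech, end_session) for a hypothetical find-by-meal-type turn.

    meal_type is the value the user gave (None if they said nothing usable);
    prompts_so_far counts how many times the system has already asked.
    """
    if meal_type and meal_type.lower() in MEAL_TYPES:
        # Happy path: the user named the meal, so no time-of-day guessing.
        return f"Great, let's find you a pancake recipe for {meal_type.lower()}.", False
    if prompts_so_far >= MAX_PROMPTS:
        # Repair path exhausted: exit gracefully rather than loop.
        return "No problem, come back whenever you're ready for pancakes!", True
    if prompts_so_far == 0:
        # Ask, rather than assume the meal from the time of day.
        return "Which meal is this for: breakfast, lunch, dinner, a snack or dessert?", False
    # Second attempt: a more explicit reprompt listing the valid answers.
    return "You can say breakfast, lunch, dinner, snack or dessert. Which would you like?", False


print(handle_find_by_meal_type(None, 0))
```

Escalating from an open question to an explicit list of options, then exiting, follows the repair-path idea above without trapping the user in a loop.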

Script

With the flows and logic defined, I wrote out the script. The script here represents the conversation between the user and system and all the possible responses the system might output, including error handling and help. This forms the interaction model of the skill.

Here I put my linguistic background to great use and validated the design against conversation design principles to improve the script. One example is the use of discourse markers to mimic conversational speech.

Key Insights

I needed to build more variety into the script to avoid repetitive dialogues.

By role-playing the flows and evaluating them, I observed that I needed to include logic to prevent the same discourse marker from being repeated. If everything the system says begins with ‘So’, this may undermine users’ perception of the system.
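A minimal sketch of that no-repeat logic, assuming the skill keeps the last marker it used during the session. The class name, the method names and the marker list are hypothetical; the idea is simply to exclude the previous marker before picking the next one at random.

```python
import random
from typing import Optional, Sequence


class DiscourseMarkerPicker:
    """Choose a discourse marker, never reusing the one from the previous turn."""

    def __init__(self, markers: Sequence[str], rng: Optional[random.Random] = None):
        self._markers = list(dict.fromkeys(markers))  # drop duplicates, keep order
        if len(self._markers) < 2:
            raise ValueError("need at least two distinct markers to avoid repetition")
        self._rng = rng or random.Random()
        self._last: Optional[str] = None

    def next_marker(self) -> str:
        # Exclude the previous marker, then pick at random from the rest.
        choices = [m for m in self._markers if m != self._last]
        marker = self._rng.choice(choices)
        self._last = marker
        return marker

    def with_marker(self, sentence: str) -> str:
        """Prefix a system response with a fresh marker, e.g. 'Okay, here's one.'"""
        return f"{self.next_marker()}, {sentence}"


picker = DiscourseMarkerPicker(["So", "Okay", "Alright", "Right"])
print(picker.with_marker("here's a pancake you might like."))
print(picker.with_marker("you'll need flour, eggs and milk."))
```

Randomising among the remaining markers, rather than cycling through them in a fixed order, keeps the variation from becoming a predictable pattern of its own.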

Usability Testing

With the script finished (for now), I prepared for usability testing. I used Wizard of Oz testing as a method to quickly evaluate the early design.

Testing Goals

- Script accuracy

- Navigation

- Errors

- Opportunities

Key Insights

Rating a recipe produced a wide range of utterances, especially conversational ones such as “Tell it I liked that recipe”.

I had not anticipated that users would be so conversational with the system when rating a recipe. However, I was also not confident that they would use a direct imperative like “rate recipe”. I therefore need to account for more variation in language use depending on locale, age, gender, etc.

Positive Feedback

Participants noted how easy it was to interact with the virtual assistant.

The system’s language received positive feedback for its clarity, simplicity and prompting. This was reassuring to hear, given the lack of confidence in, and disappointment with, virtual assistants that participants mentioned.

Final Design

I then made the prioritised changes to the design, as highlighted during usability testing.

This is the current implementation of the design where users can:

a recipe

Reflection

The Pancake Plaisir Journey

This is just the beginning: we can enhance the experience by making it multimodal.

I set out to design a recipe skill that would help people find recipes, get inspiration and learn about new pancakes. I believe the current version makes a good attempt at this; however, it lacks a vital component that comes with food for a lot of people: we eat with our eyes.

Taking a multimodal approach would enhance the voice experience and open Pancake Plaisir up to more people, who could choose the mode and channel of interaction that suits them.

What Next?

Testing the recipe steps to check cognitive load and flow.

I am confident that users can successfully select a recipe, but the steps users follow to make each recipe are untested. As an amateur pâtissière, I am familiar with recipe steps, especially those that are complex and designed for reading. I would therefore need to test the recipe steps to check the amount of information presented.

The Challenges

Public confidence in conversation systems presents a large challenge.

Creating a conversation that is easy for people to take part in requires careful consideration of language and cognitive load, and here my linguistic background helped. However, it is a difficult balancing act between not overpromising on the system’s capabilities and not restricting users to a rigid conversation.

I am grateful to my conversation design mentor Darshana, who joined me on this journey to check my thinking and gave me opportunities for deeper learning. This has left me with a greater thirst to be part of projects that use voice intentionally.

Thank you for reading the case study for